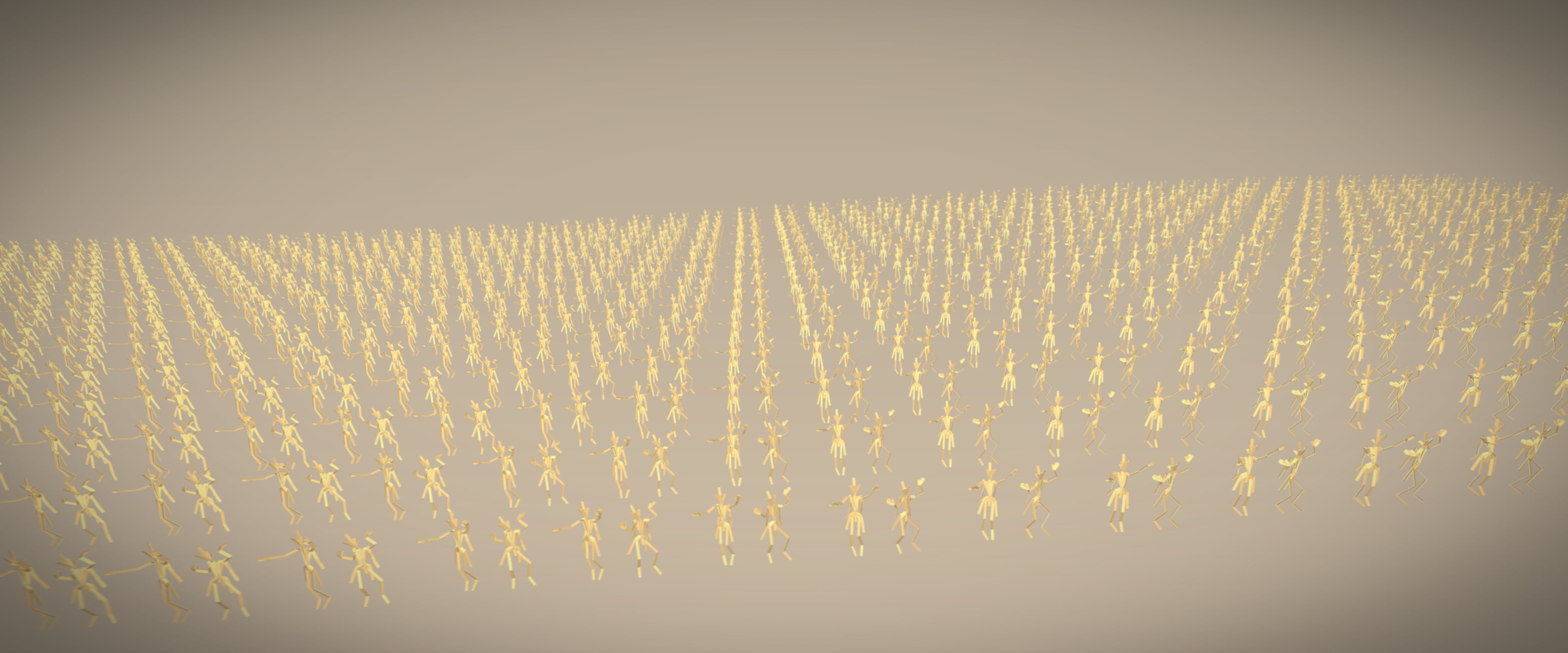

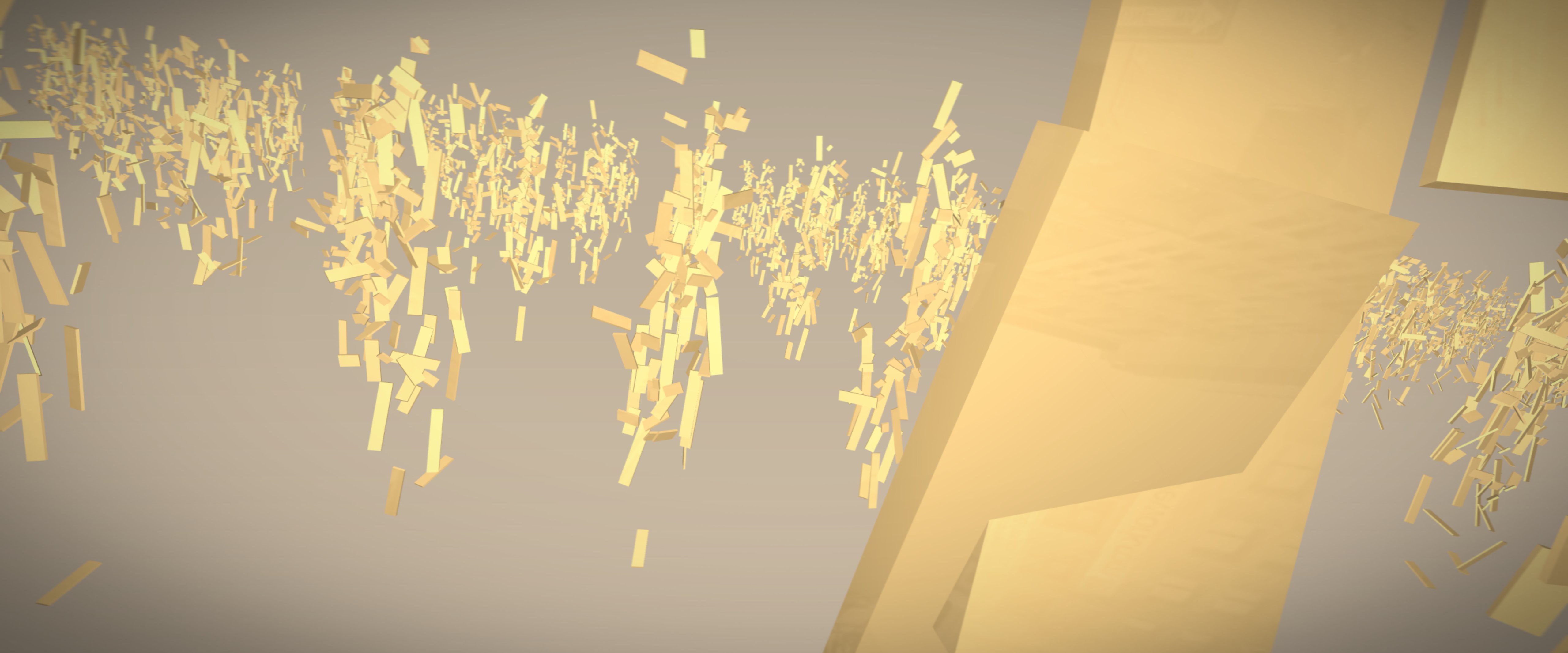

Mass Movement #1

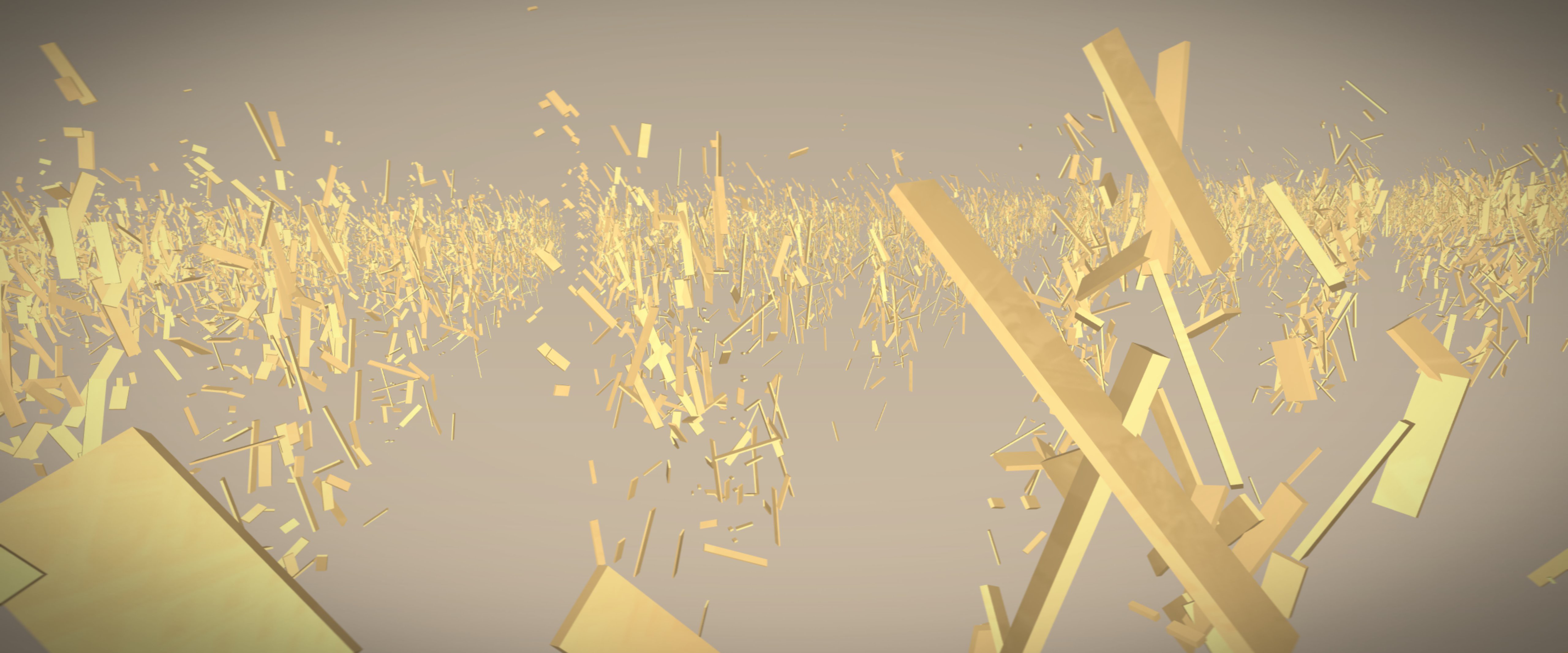

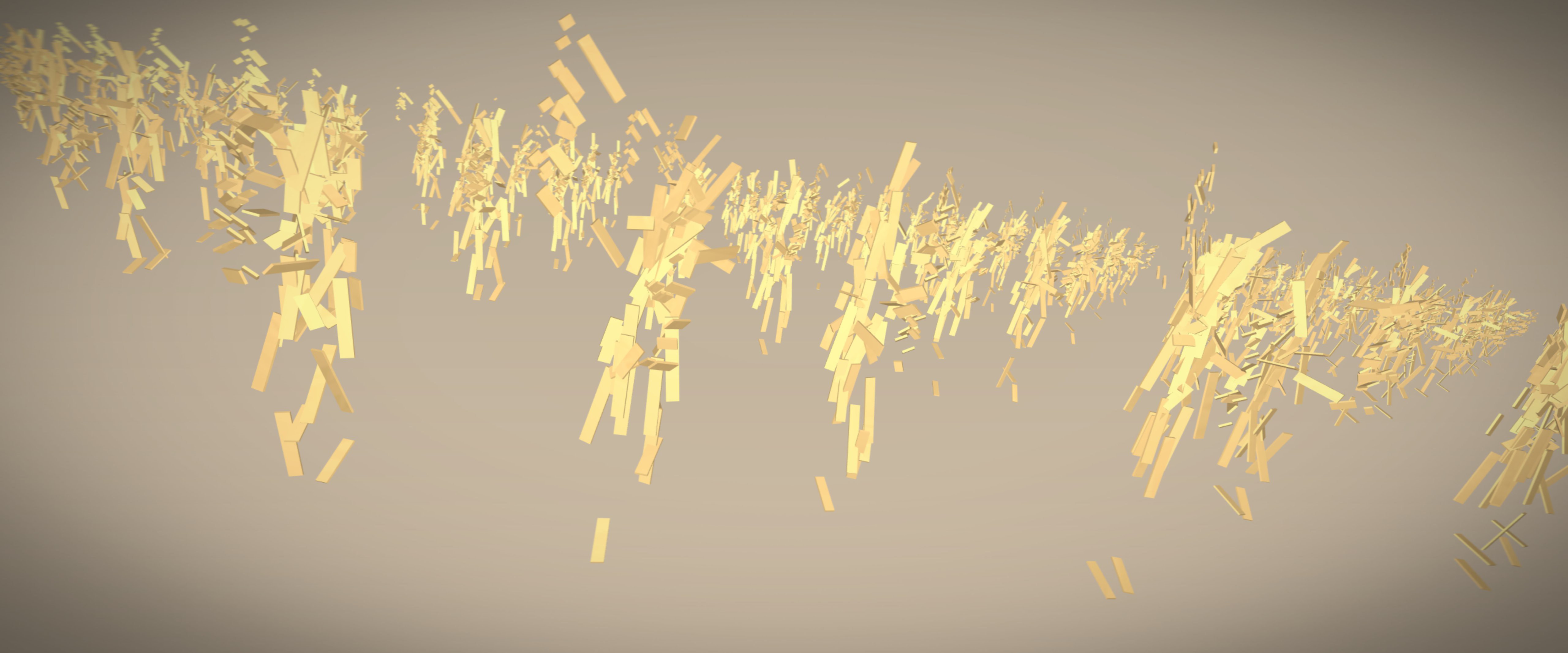

A mass choreography of 1200+ toons, orchestrated by GLSL, performed in realtime on the GPU.

Based on found choreography data from Japanese anime bulletin boards.

Process

In late 2012, I got temporarily sucked into a Japanese pop-culture phenomenon. Someone had posted a 3D character dance choreography to “Gangnam Style” on YouTube, along with a link to a 3D animation file whose extension, ‘.vmd’, I had never seen before.

It turned out “.vmd” stood for “Vocaloid Motion Data,” and these files were generated with a piece of software called MikuMikuDance, or MMD for short. MikuMikuDance was originally distributed as freeware to promote the anime character Hatsune Miku.

At first glance MMD might look crude, and the GUI in most versions requires you to be able to read Japanese. But it is specialised for dancing, and kids (and probably grown-ups) in Japan have used it enthusiastically ever since to create and share choreographies.

After looking up some of the sparse documentation on the file type, and working out up to which marker to read the header, I discovered some binary blobs that looked like strings. I suspected they were in Japanese, and was rewarded when, after some testing, I reinterpreted them as Shift_JIS and pasted the resulting characters into Google Translate. I knew I was onto something when Translate came back with “Head” for “頭” and “All of the Parent” for “全ての親”.
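The reinterpretation step can be sketched in a few lines of Python. This is an illustration, not the code used for the piece: the helper name `decode_name` is made up, and the 15-byte, NUL-padded field width is an assumption based on community descriptions of the VMD format rather than on any official specification.

```python
def decode_name(field: bytes) -> str:
    """Decode a fixed-width, NUL-padded name field as Shift_JIS.

    Hypothetical helper; the exact record layout is an assumption.
    """
    # Keep only the bytes before the first NUL terminator, if there is one.
    # Shift_JIS never uses 0x00 as part of a multi-byte character.
    raw = field.split(b"\x00", 1)[0]
    # errors="replace" stops the sketch from raising on unexpected bytes.
    return raw.decode("shift_jis", errors="replace")


FIELD_WIDTH = 15  # assumed width of a bone-name field

# Build a sample field the way a file might store the bone name "頭".
sample = "頭".encode("shift_jis").ljust(FIELD_WIDTH, b"\x00")

print(sample.hex(" "))      # the raw bytes, as a hex editor would show them
print(decode_name(sample))  # the readable bone name
```

Cutting at the first NUL before decoding matters because whatever follows the terminator in a fixed-width field is padding, not text.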

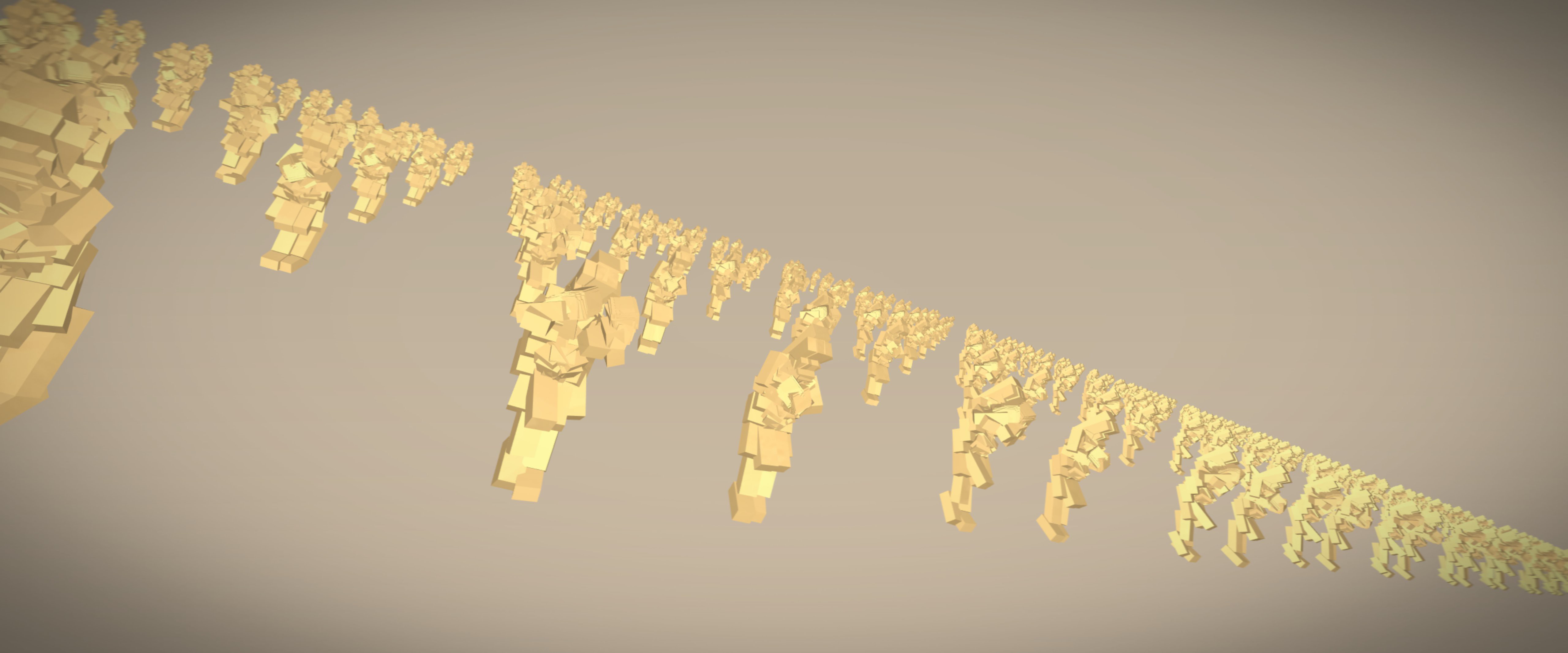

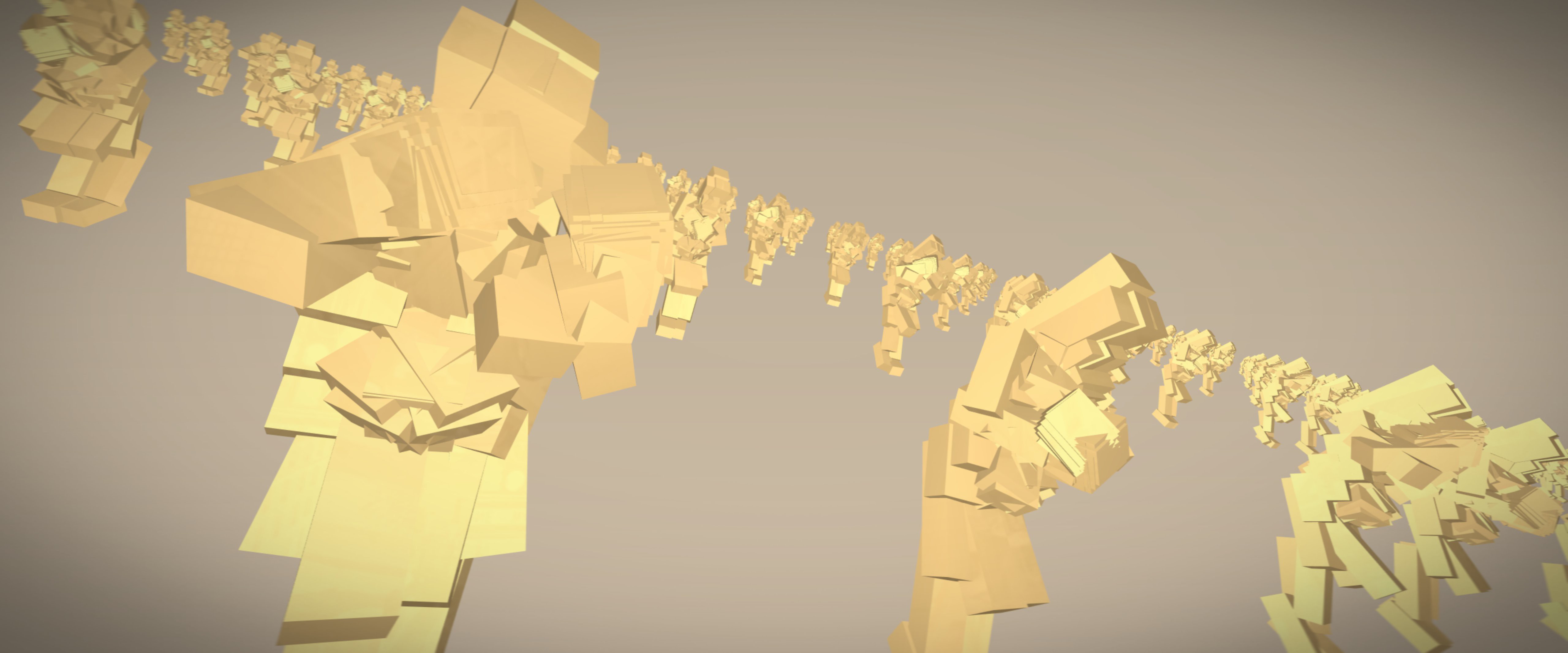

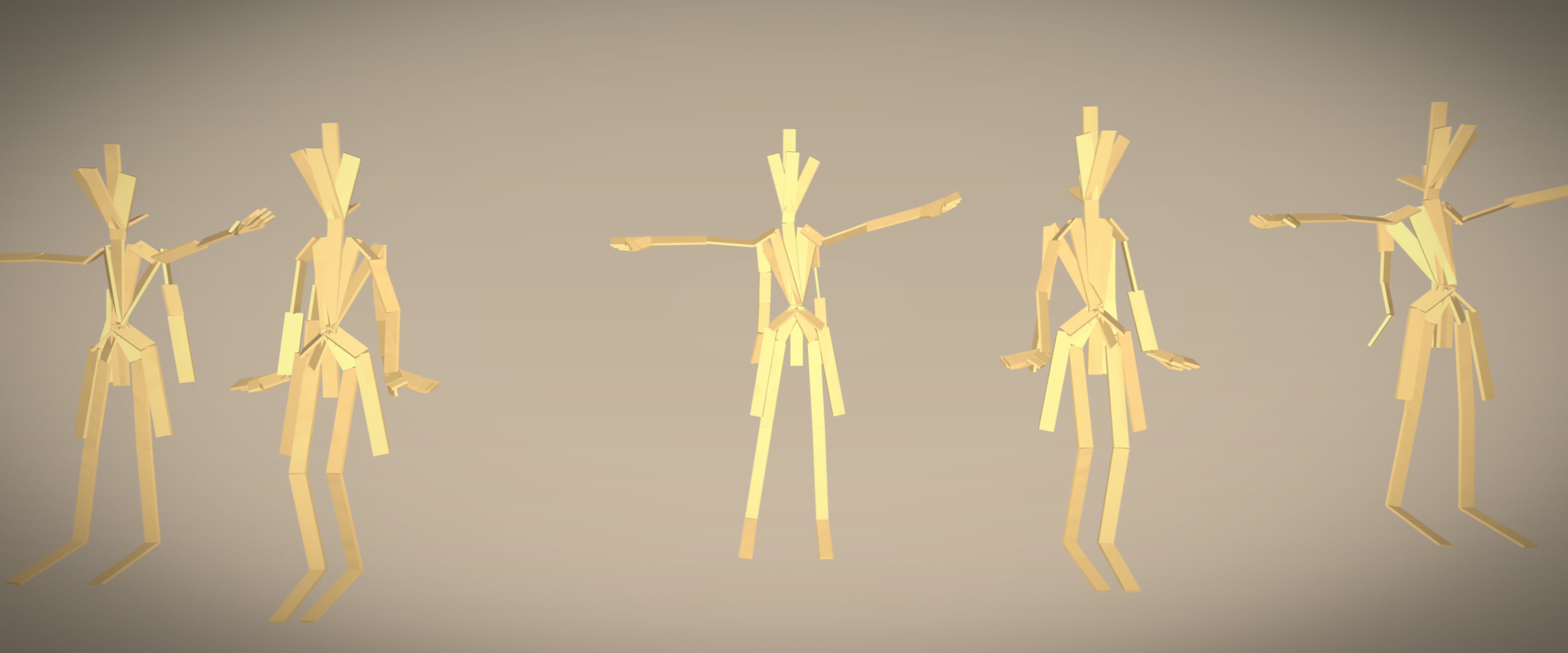

I then found that a VMD file contains the motion data of a skeleton at regular time frames, between which the poses can be linearly interpolated. The skeleton has many nodes, although only about 15 seem to be regularly used. Some nodes (like the ones for the feet and the ones for the bottom-most lock of hair) are connected through simple inverse kinematics chains, probably to make the movement more realistic and varied.
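Linear interpolation between stored frames can be sketched as follows. This is a minimal Python illustration under stated assumptions: the `(frame_number, position)` keyframe layout and the function names are hypothetical, and only positions are handled. Orientations would typically be interpolated separately (for example with quaternion slerp), which is left out here.

```python
from bisect import bisect_right


def lerp(a, b, t):
    """Linearly blend two equal-length tuples; t=0 gives a, t=1 gives b."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def sample_position(keyframes, frame):
    """Return a bone position at `frame`.

    keyframes: list of (frame_number, (x, y, z)), sorted by frame number,
    with no duplicate frame numbers (an assumption of this sketch).
    """
    frames = [f for f, _ in keyframes]
    i = bisect_right(frames, frame)
    if i == 0:                # before the first keyframe: hold the first pose
        return keyframes[0][1]
    if i == len(keyframes):   # at or after the last keyframe: hold the last pose
        return keyframes[-1][1]
    (f0, p0), (f1, p1) = keyframes[i - 1], keyframes[i]
    t = (frame - f0) / (f1 - f0)  # normalised position between the two keys
    return lerp(p0, p1, t)


# Two made-up keyframes for a single bone, ten frames apart.
keys = [(0, (0.0, 0.0, 0.0)), (10, (1.0, 2.0, 0.0))]
print(sample_position(keys, 5))  # halfway between the two poses
```

Clamping outside the keyframe range is a design choice for the sketch; looping or extrapolating would be equally valid depending on the choreography.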

Whole choreographies can be described by tracking a dancer’s skeleton and saving the position and orientation of every single bone at each point in time. The adult human body has about 206 bones, but the Pareto principle applies here too: for skeletal animation, you can record pretty decent dance performances while keeping track of just about 15 bones.

Freely available, interesting motion data is usually quite difficult to find. Thanks to the VMD file format, the anime bulletin boards, and the culture of sharing around them, I found a rich sample of interesting, human-made choreographies to use as a starting point for this piece.

Additional Credits

| Contribution | Credit |
| --- | --- |
| Original VMD motion | @Yui-chan (via nicovideo.jp) |
| Music | “Ain’t We Got Fun” – Benson Orchestra of Chicago, 1921, transfer from an original cylinder recording. |
| Inverse kinematics help from | Bullet Physics, VPVL |
| Initial file decoding | Hex Fiend |
| Timeline for video rendering | ofxTimeline by @obviousjim |

Technical Details

3D bone transformations are pre-calculated in C++, and their matrices are pushed to an instanced vertex shader. There, the toons are fleshed out, morphed, and distributed in space.
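The CPU-side pre-calculation can be pictured roughly like this. The sketch is in Python rather than the C++ used for the piece, and everything in it is an assumption for illustration: the three-bone chain, the function names, and the idea of flattening to a column-major float list (the layout GLSL uses for `mat4`). How the matrices actually reach the shader is not described here.

```python
def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as row-major nested lists."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]


def translation(x, y, z):
    """4x4 matrix that moves a point by (x, y, z)."""
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def world_matrices(bones):
    """Compose local bone transforms down the hierarchy.

    bones: list of (parent_index, local_matrix); parents must appear before
    their children, and a parent index of -1 marks the root.
    """
    world = []
    for parent, local in bones:
        world.append(local if parent < 0 else mat_mul(world[parent], local))
    return world


def flatten_column_major(matrices):
    """Flatten matrices into one float list, column by column per matrix."""
    return [m[r][c] for m in matrices for c in range(4) for r in range(4)]


# Hypothetical chain: hip (root) -> spine -> head, offsets along the y axis.
skeleton = [
    (-1, translation(0, 10, 0)),  # hip, placed in world space
    (0, translation(0, 3, 0)),    # spine, relative to hip
    (1, translation(0, 4, 0)),    # head, relative to spine
]
world = world_matrices(skeleton)
flat = flatten_column_major(world)

print(world[2][1][3])  # world-space height of the head bone
print(len(flat))       # 16 floats per bone
```

Doing this composition once per frame on the CPU means each shader instance only has to apply finished matrices, which keeps the per-vertex work small.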

Parts of a custom addon, ofxVboMesh, were later merged into openFrameworks core, making it easier to draw instanced meshes.

Realtime, 60 Hz.

Made with openFrameworks.